Many of the biggest names in artificial intelligence have signed a short statement warning that their technology could spell the end of the human race.

Published Tuesday, the statement reads in full: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

The statement was posted to the website of the Center for AI Safety, a San Francisco-based nonprofit organization. It’s signed by almost 400 people, including some of the biggest names in the field — Sam Altman, CEO of OpenAI, the company behind ChatGPT, as well as top AI executives from Google and Microsoft and 200 academics.

The statement is the most recent in a series of alarms raised by AI experts — but also one that stoked growing pushback against a focus on what some see as overhyped hypothetical harms from AI.

Meredith Whittaker, president of the encrypted messaging app Signal and chief adviser to the AI Now Institute, a nonprofit group devoted to ethical AI practices, mocked the statement as an example of tech leaders overpromising their product.

Clément Delangue, co-founder and CEO of the AI company Hugging Face, tweeted a picture of an edited version of the statement subbing in “AGI” for AI.

AGI stands for artificial general intelligence, a theoretical form of AI that is as capable as or more capable than humans.

The statement comes two months after a different group of AI and tech leaders, including Tesla owner Elon Musk, Apple co-founder Steve Wozniak, and IBM chief scientist Grady Booch, signed a petition, open to the public, calling for a “pause” on all large-scale AI research. None of them have yet signed the new statement, and no such pause has happened.

Altman, who has repeatedly called for AI to be regulated, charmed Congress earlier this month. He held a private dinner with dozens of House members and was the subject of an amicable Senate hearing, where he became the rare tech executive whom both parties warmed to.

Altman’s calls for regulation have had their limits. Last week, he said that OpenAI could leave the European Union if AI became “overregulated.”

While the White House has announced some plans to address AI, there is no indication that the United States has imminent plans for large-scale regulation of the industry.

Gary Marcus, a leading AI critic and a professor emeritus of psychology and neural science at New York University, said that while potential threats from AI are very real, it’s distracting to only worry about a hypothetical worst-case scenario.

“Literal extinction is just one possible risk, not yet well-understood, and there are many other risks from AI that also deserve attention,” he said.

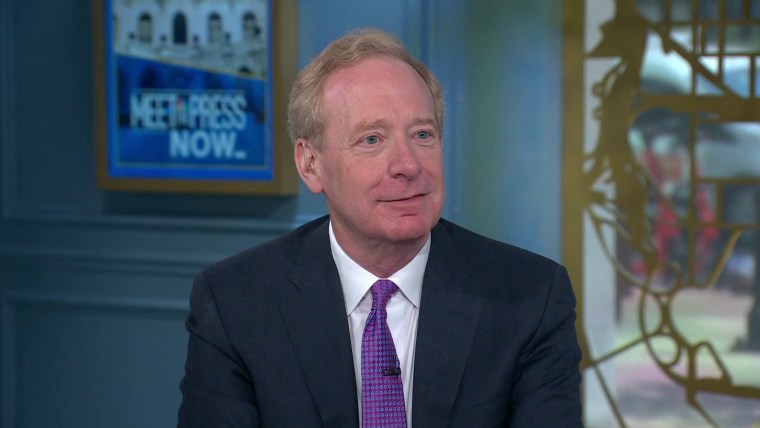

Some tech experts have said that more mundane and immediate uses of AI are a bigger threat to humanity. Microsoft President Brad Smith has said that deepfakes, and their potential use for disinformation, are his biggest worries about the technology.

Last week, markets briefly dipped after a fake, seemingly AI-generated image of an explosion near the Pentagon went viral on Twitter.

