Digitally edited pornographic videos featuring the faces of hundreds of unconsenting women are attracting tens of millions of visitors on websites, one of which can be found at the top of Google search results.

The people who create the videos charge as little as $5 to download thousands of clips featuring the faces of celebrities, and they accept payment via Visa, Mastercard and cryptocurrency.

While such videos, often called deepfakes, have existed online for years, advances in artificial intelligence and the growing availability of the technology have made it easier — and more lucrative — to make nonconsensual sexually explicit material.

An NBC News review of two of the largest websites that host sexually explicit deepfake videos found that they were easily accessible through Google and that creators on the websites also used the online chat platform Discord to advertise videos for sale and the creation of custom videos.

The deepfakes are created using AI software that can take an existing video and seamlessly replace one person’s face with another’s, even mirroring facial expressions. Some lighthearted deepfake videos of celebrities have gone viral, but the most common use is for sexually explicit videos. According to Sensity, an Amsterdam-based company that detects and monitors AI-developed synthetic media for industries like banking and fintech, 96% of deepfakes are sexually explicit and feature women who didn’t consent to the creation of the content.

Most deepfake videos are of female celebrities, but creators now also offer to make videos of anyone. A creator offered on Discord to make a 5-minute deepfake of a “personal girl,” meaning anyone with fewer than 2 million Instagram followers, for $65.

The nonconsensual deepfake economy has remained largely out of sight, but it recently had a surge of interest after a popular livestreamer admitted this year to having looked at sexually explicit deepfake videos of other livestreamers. Right around that time, Google search traffic spiked for “deepfake porn.”

The spike also coincided with an uptick in the number of videos uploaded to MrDeepFakes, one of the most prominent websites in the world of deepfake porn. The website hosts thousands of sexually explicit deepfake videos that are free to view. It gets 17 million visitors a month, according to the web analytics firm SimilarWeb. A Google search for “deepfake porn” returned MrDeepFakes as the first result.

In a statement to NBC News, a Google spokesperson said that people who are the subject of deepfakes can request removal of pages from Google Search that include “involuntary fake pornography.”

“In addition, we fundamentally design our ranking systems to surface high quality information, and to avoid shocking people with unexpected harmful or explicit content when they aren’t looking for it,” the statement went on to say.

Genevieve Oh, an independent internet researcher who has tracked the rise of MrDeepFakes, said video uploads to the website have steadily increased. In February, the website had its most uploads yet — more than 1,400.

Noelle Martin, a lawyer and legal advocate from Western Australia who works to raise awareness of technology-facilitated sexual abuse, said that, based on her conversations with other survivors of sexual abuse, it is becoming more common for noncelebrities to be victims of such nonconsensual videos.

“More and more people are targeted,” said Martin, who was targeted with deepfake sexual abuse herself. “We’ll actually hear a lot more victims of this who are ordinary people, everyday people, who are being targeted.”

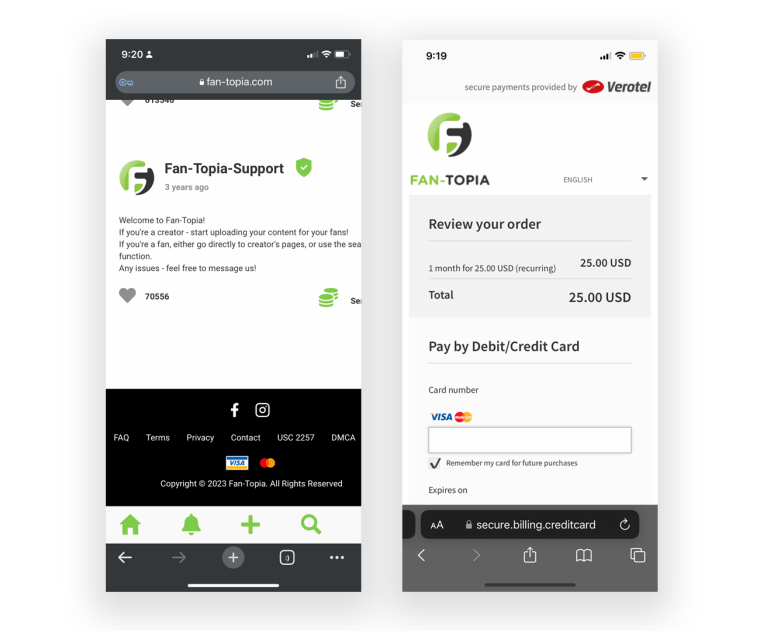

The videos on MrDeepFakes are usually only a few minutes long, acting like teaser trailers for much longer deepfake videos, which are usually available for purchase on another website: Fan-Topia. The website bills itself on Instagram as “the highest paying adult content creator platform.”

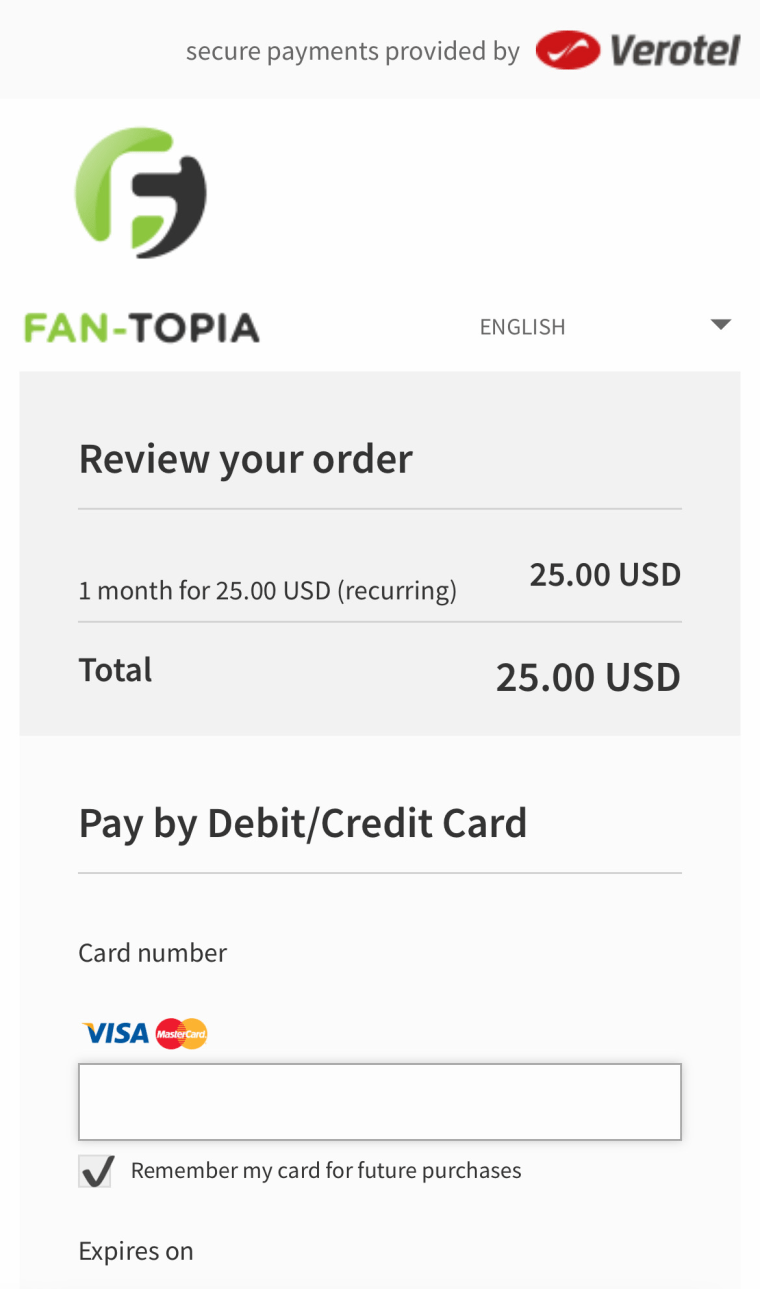

When deepfake consumers find videos they like on MrDeepFakes, clicking creators’ profiles often takes them to Fan-Topia links, where they can pay for access to libraries of deepfake videos with their credit cards. On the Fan-Topia payment page, the logos for Visa and Mastercard appear alongside the fields where users can enter credit card information. The purchases are made through an internet payment service provider called Verotel, which is based in the Netherlands and advertises to what it calls “high-risk” webmasters running adult services.

Verotel didn’t respond to a request for comment.

Some deepfake creators take requests through Discord, a chatroom platform. The creator of MrDeepFakes’ most-watched video, according to the website’s view counter, had a profile and a chatroom on Discord where subscribers could message directly to make custom requests featuring a “personal girl.” Discord removed the server for violating its rules around “content or behavior that sexualizes or sexually degrades others without their apparent consent” after NBC News asked for comment.

The creator didn’t respond to a message sent over Discord.

Discord’s community guidelines prohibit “the coordination, participation, or encouragement of sexual harassment,” including “unwanted sexualization.” NBC News has reviewed other Discord communities devoted to creating sexually explicit deepfake images with an AI image-generation model known as Stable Diffusion, one of which featured nonconsensual imagery of celebrities and was shut down after NBC News asked for comment.

In a statement, Discord said it expressly prohibits “the promotion or sharing of non-consensual deepfakes.”

“Our Safety Team takes action when we become aware of this content, including removing content, banning users, and shutting down servers,” the statement said.

In addition to making videos, deepfake creators sell access to libraries with thousands of videos for subscription fees as low as $5 a month. Others are free.

“Subscribe today and fill up your hard drive tomorrow!” a deepfake creator’s Fan-Topia description reads.

While Fan-Topia doesn’t explicitly market itself as a space for deepfake creators, it has become one of the most popular homes for them and their content. Searching “deepfakes” and terms associated with the genre on Fan-Topia returned over 100 accounts of deepfake creators.

Some of those creators are hiring. On the MrDeepFake Forums, a message board where creators and consumers can make requests, ask technical questions and talk about the AI technology, two popular deepfake creators are advertising for paid positions to help them create content. Both listings were posted in the past week and offer cryptocurrency as payment.

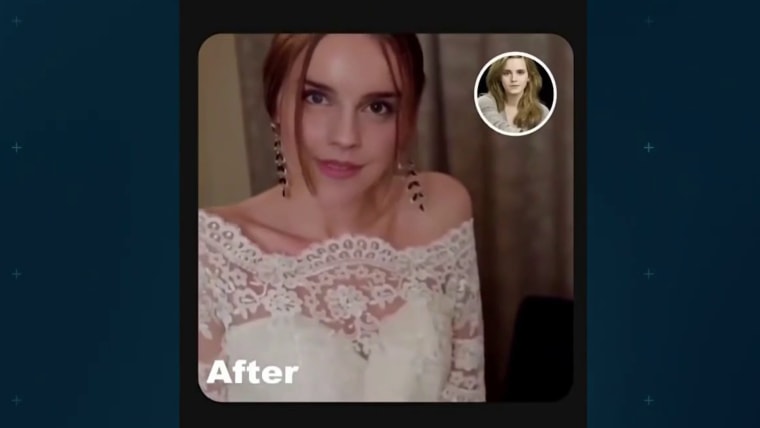

People ranging from YouTube and Twitch creators to women who star in big-budget franchises are commonly featured in deepfake videos on Fan-Topia and MrDeepFakes. The two women featured in the most content on MrDeepFakes, according to the website’s rankings, are actors Emma Watson and Scarlett Johansson. They were also featured in a sexually suggestive Facebook ad campaign for a deepfake face-swap app that ran for two days before NBC News reported on it (after the article was published, Meta took down the ad campaigns, and the app featured in them was removed from Apple’s App Store and Google Play).

“It’s not a porn site. It’s a predatory website that doesn’t rely on the consent of the people on the actual website,” Martin said about MrDeepFakes. “The fact that it’s even allowed to operate and is known is a complete indictment of every regulator in the space, of all law enforcement, of the entire system, that this is even allowed to exist.”

Visa and Mastercard have previously cracked down on their use as payment processors for sexually exploitative videos, but they remain available to use on Fan-Topia. In December 2020, after a New York Times op-ed said child sexual abuse material was hosted on Pornhub, the credit card companies stopped allowing transactions on the website. Pornhub said the assertion it allowed such material was “irresponsible and flagrantly untrue.” In August, the companies suspended payments for advertisements on Pornhub, too. Pornhub prohibits deepfakes of all kinds.

After that decision, Visa CEO and Chairman Al Kelly said in a statement that Visa’s rules “explicitly and unequivocally prohibit the use of our products to pay for content that depicts nonconsensual sexual behavior.”

Visa and Mastercard did not respond to requests for comment.

Other deepfake websites have found different profit models.

Unlike Fan-Topia and its paywalled model, MrDeepFakes appears to generate revenue through advertisements and relies on the large audience that has been boosted by its positioning in Google search results.

Created in 2018, MrDeepFakes has faced some efforts to shutter its operation. A Change.org petition to take it down, created by the nonprofit #MyImageMyChoice campaign, has over 52,000 signatures, making it one of the most popular petitions on the platform, and it has been shared by influencers targeted on MrDeepFakes.

Since 2018, when consumer face-swap technology entered the market, the apps and programs used to make sexually explicit deepfakes have become more refined and widespread. Dozens of apps and programs are free or offer free trials.

“In the past, even a couple years ago, the predominant way people were being affected by this kind of abuse was the nonconsensual sharing of intimate images,” Martin said. “It wasn’t even doctored images.”

Now, Martin said, survivors of sexual abuse, both online and off, have been targeted with deepfakes. In Western Australia, Martin successfully campaigned to outlaw nonconsensual deepfakes and image-based sexual abuse, but, she said, law enforcement and regulators are limited by jurisdiction, because the deepfakes can be made and published online from anywhere in the world.

In the U.S., only four states have passed legislation specifically about deepfakes. Victims are similarly disadvantaged because of jurisdiction and because some of the laws pertain only to elections or child sex abuse material.

“The consensus is that we need a global, collaborative response to these issues,” Martin said.
